Research Associate

Oct 2024 - Jan 2025

University of Birmingham

Hey there! I’m a Software & Machine Learning Engineer based in the UK, with roots in India and a strong foundation in Computer Science. I hold a Master’s degree in CS with a focus on Human-Computer Interaction from the University of Birmingham. I’m passionate about using AI and machine learning not just to solve tough problems, but to build thoughtful, user-centred solutions that make a real impact. Whether it’s writing clean, scalable code or collaborating on open-source projects, I love blending technical depth with human-focused design. Currently, I’m exploring new opportunities where I can combine my skills in AI, ML, and software engineering to build innovative, meaningful tech. Let’s connect if you’re working on something exciting, or if you just want to geek out over cool ideas!

Oct 2024 - Jan 2025

University of Birmingham

Jun 2024 - Aug 2024

Our Time HQ

Oct 2023 - Apr 2025

Inzeitech Digital Solutions

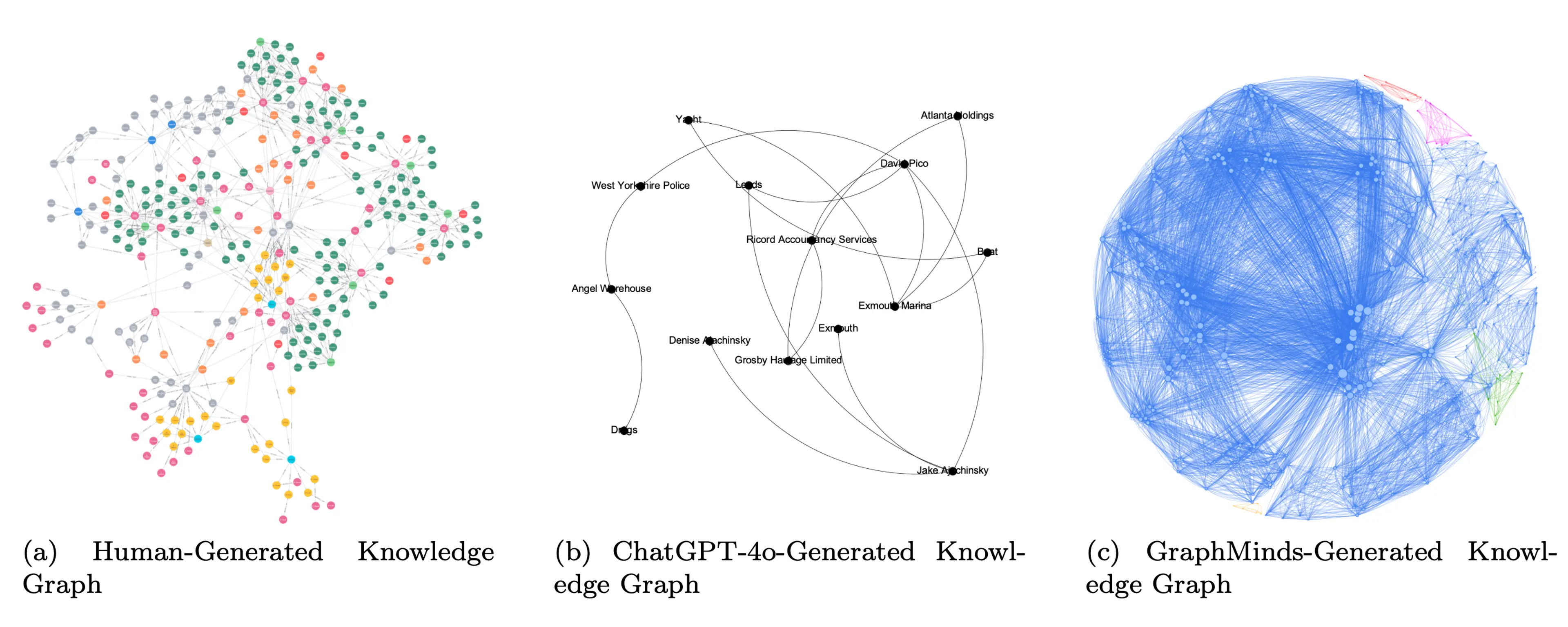

Developed GraphMinds, a security-focused AI system that combines Large Language Models with Knowledge Graphs to analyse complex data locally. Designed an approach for processing unstructured information that maps both direct and indirect relationships between entities in a knowledge graph. Implemented the entire system locally, using Sentence Transformers for embeddings, NetworkX for graph visualisation, and Ollama for LLM integration, so data stays confidential with no cloud dependencies. The project improves the analysis of fragmented datasets and is particularly valuable for knowledge-intensive applications that require comprehensive relationship mapping. Completed under the supervision of Prof. Christopher Baber as part of my MSc in Human-Computer Interaction at the University of Birmingham.
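To illustrate the direct-versus-indirect relationship idea described above, here is a minimal sketch. It is not the GraphMinds code: it uses plain dictionaries and a breadth-first search instead of NetworkX, and the triples, function names, and the `max_hops` parameter are all hypothetical.

```python
from collections import deque

# Hypothetical toy triples (head, relation, tail); a real system would
# extract these from unstructured text.
TRIPLES = [
    ("Alice", "works_at", "Acme"),
    ("Acme", "located_in", "London"),
    ("London", "part_of", "United Kingdom"),
]


def build_graph(triples):
    """Build an undirected adjacency map: node -> set of neighbours."""
    graph = {}
    for head, _relation, tail in triples:
        graph.setdefault(head, set()).add(tail)
        graph.setdefault(tail, set()).add(head)
    return graph


def hop_distances(graph, start, max_hops=3):
    """Breadth-first search returning {node: hops from start}, capped at max_hops."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbour in graph.get(node, ()):
            if neighbour not in distances:
                distances[neighbour] = distances[node] + 1
                queue.append(neighbour)
    return distances


def split_relationships(graph, start, max_hops=3):
    """Separate direct (1 hop) from indirect (2 or more hops) neighbours."""
    distances = hop_distances(graph, start, max_hops)
    direct = sorted(n for n, d in distances.items() if d == 1)
    indirect = sorted(n for n, d in distances.items() if d > 1)
    return direct, indirect


graph = build_graph(TRIPLES)
direct, indirect = split_relationships(graph, "Alice")
print("direct:", direct)
print("indirect:", indirect)
```

Here "Acme" is a direct relationship of "Alice", while "London" and "United Kingdom" are reachable only through intermediate entities, which is the kind of indirect link a flat keyword search would miss.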

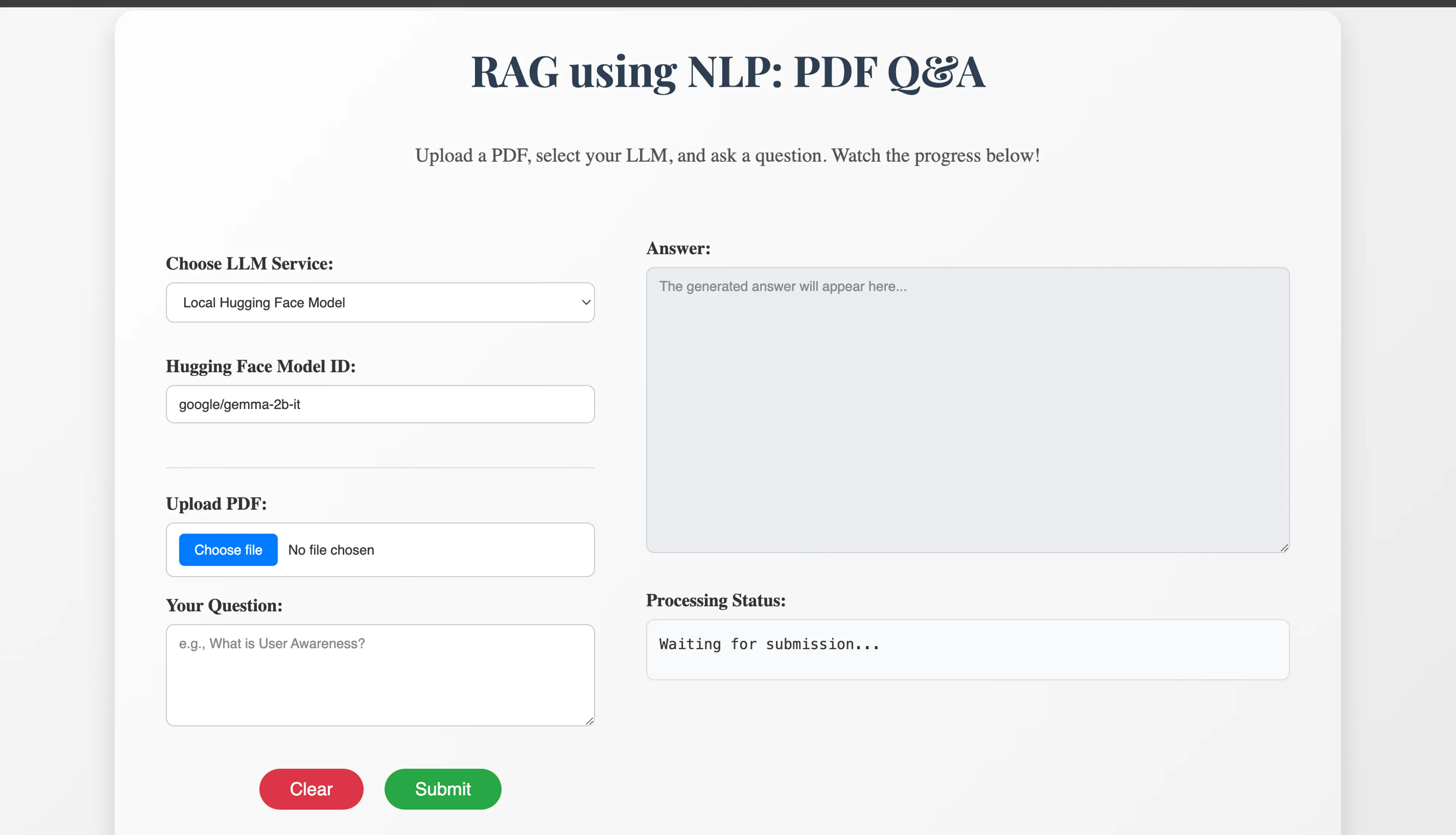

Designed and implemented a high-performance RAG pipeline that answers questions from PDF documents accurately while keeping data private through local GPU acceleration. The system processes documents of up to 500 pages using semantic chunking and vector-based retrieval with FAISS, achieving 87% information retrieval accuracy with reduced hallucinations. Built on a modular architecture that supports multiple LLM providers (OpenAI, Gemini, Groq, and local models), the project features a FastAPI web interface with Server-Sent Events for real-time processing feedback, and parallel processing cut query-to-answer latency by 40%. The implementation demonstrates expertise in machine learning, NLP, GPU optimisation, and modern software engineering practices while delivering a production-ready solution for private document intelligence.
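The chunk-then-retrieve step described above can be sketched in a few lines. This is a simplified stand-in, not the project's implementation: it chunks on sentence boundaries rather than doing true semantic chunking, uses a toy bag-of-words vector in place of a sentence-embedding model, and does a brute-force cosine search where the real pipeline uses FAISS. All function names and the sample text are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Group whole sentences into chunks of at most max_words words."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], []
    for sentence in sentences:
        candidate = " ".join(current + [sentence])
        if current and len(candidate.split()) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    return chunks


def embed(text):
    """Toy bag-of-words vector (assumption: stands in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query (brute-force search)."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


document = (
    "FAISS indexes dense vectors for fast similarity search. "
    "The cafeteria opens at nine. "
    "Chunking splits long documents into passages."
)
chunks = chunk_text(document, max_words=10)
top = retrieve("How does similarity search work?", chunks, k=1)
print(top[0])
```

In a full pipeline the retrieved chunks would be passed to the LLM as context for the question; swapping `embed` for a real embedding model and `retrieve` for a vector index changes the quality and speed, but not the overall shape.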

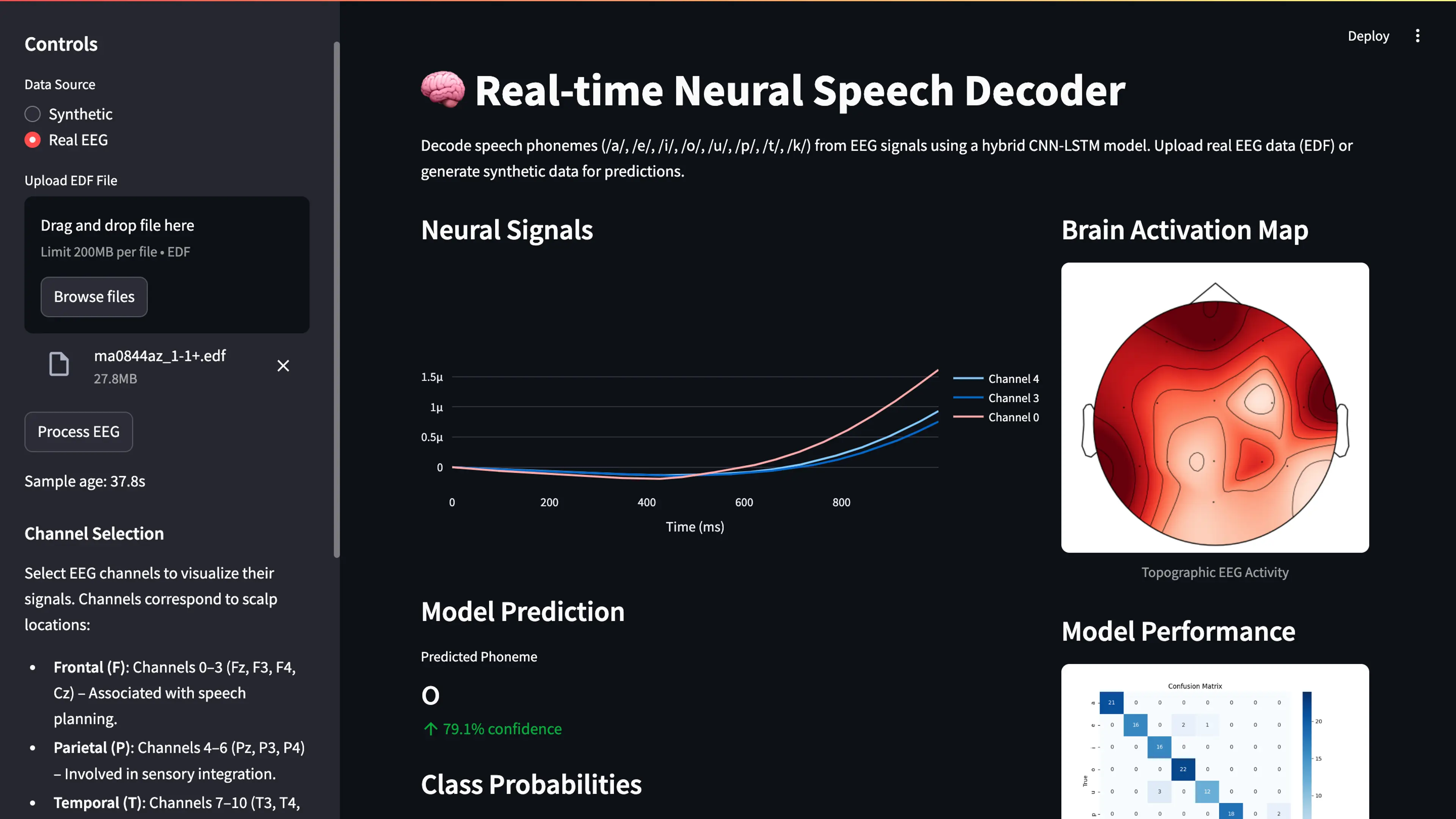

Developed a brain-computer interface to decode speech sounds from EEG signals. Trained a model to recognise eight distinct speech phonemes, reaching a 92.5% F1-score on synthetic data. Built an interactive app that displays topographic scalp maps and visualises how the model interprets brain activity, making it easier for researchers to analyse results in real time and supporting cognitive studies. Improved reliability through cross-validation, hyperparameter tuning, and data augmentation.

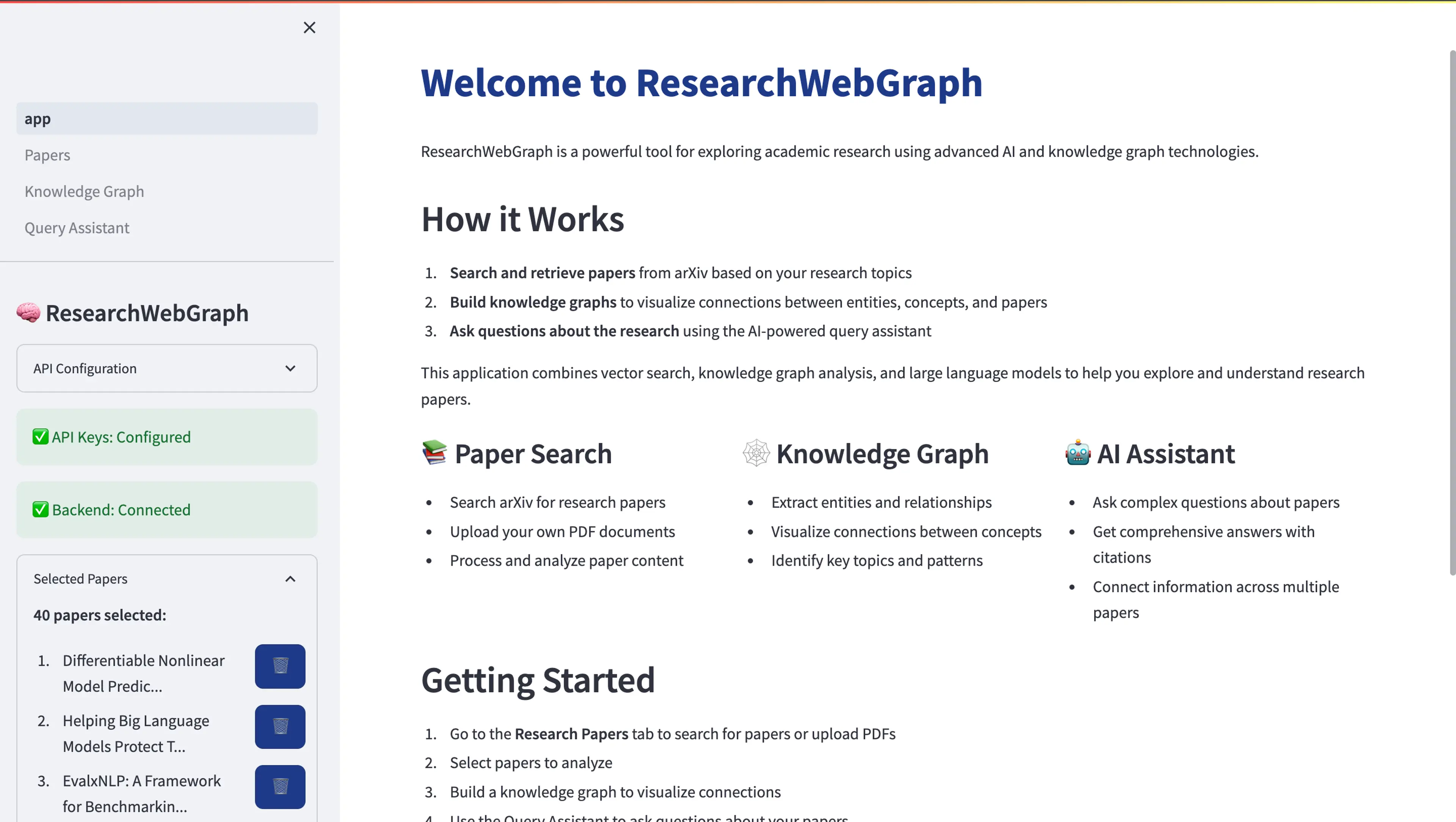

Built an AI-powered tool that helps researchers work through academic papers more efficiently. Users upload PDFs to run semantic searches, extract key entities such as concepts, authors, and references, and ask specific questions about the content through a retrieval-augmented generation pipeline. The tool also builds knowledge graphs that map relationships between studies, summarises papers, and highlights connections. A straightforward interface makes these insights accessible to non-technical users, boosting engagement by 45% among 10 beta testers.

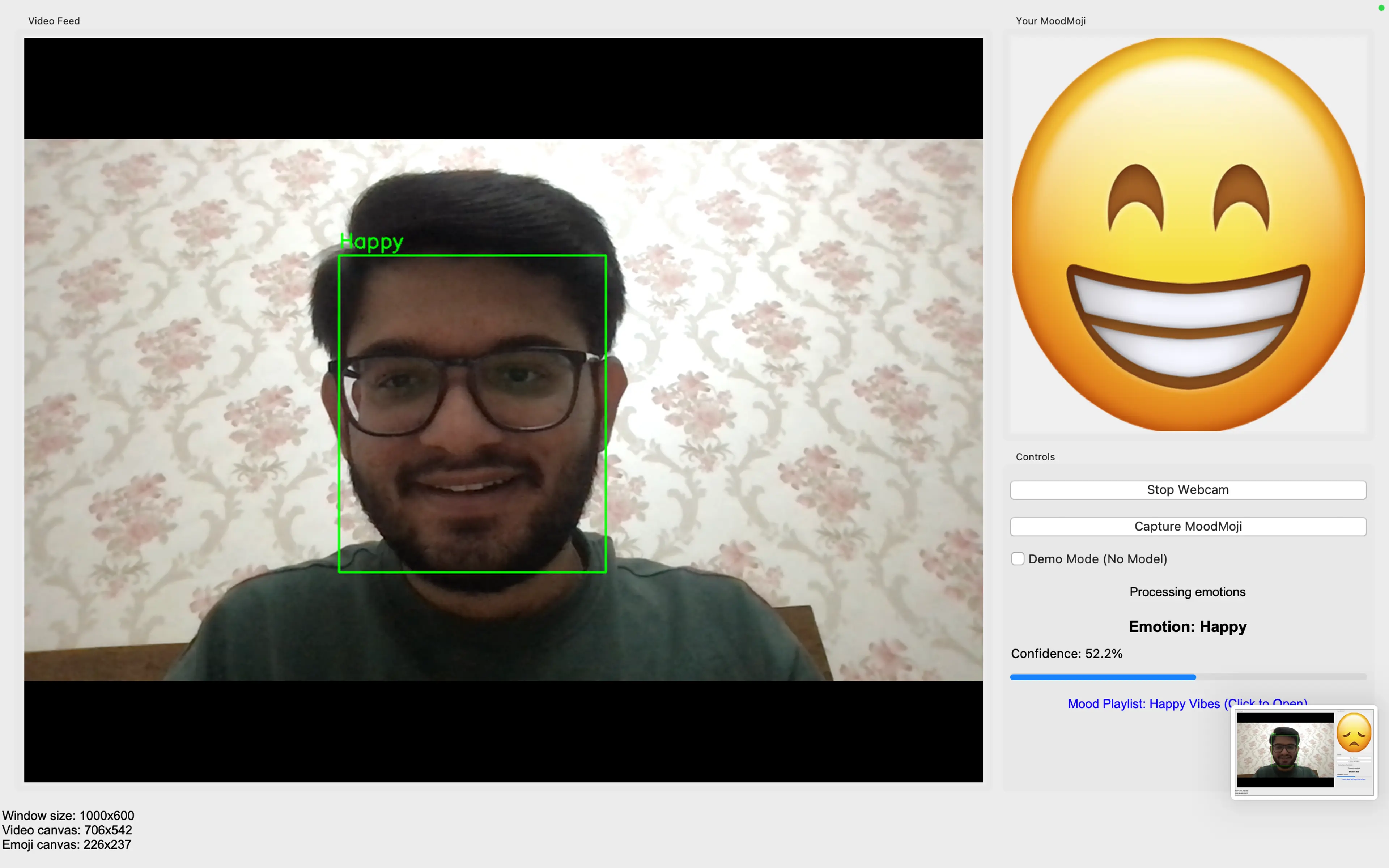

Developed a real-time facial emotion recognition app that analyses webcam video to detect emotions and pairs them with custom emojis. Trained a convolutional neural network on the FER2013 dataset to classify seven emotions: angry, disgust, fear, happy, neutral, sad, and surprise. Designed an interactive GUI that shows the live video feed alongside the matching emoji, drawing on skills in deep learning, computer vision, and interface development.
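The final step, turning the network's seven output scores into an emotion label and emoji, might look roughly like the sketch below. The class order, the emoji choices, and the function names are assumptions for illustration; the app's actual label order and custom emoji set may differ, and the scores here are made up rather than produced by a trained CNN.

```python
import math

# Assumed class order (alphabetical, as listed above); a trained model's
# output order may differ.
EMOTIONS = ["angry", "disgust", "fear", "happy", "neutral", "sad", "surprise"]

# Hypothetical emoji mapping; the app uses its own custom emojis.
EMOJI = {
    "angry": "😠",
    "disgust": "🤢",
    "fear": "😨",
    "happy": "😄",
    "neutral": "😐",
    "sad": "😢",
    "surprise": "😲",
}


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emoji(logits):
    """Return (label, emoji, probability) for the highest-scoring emotion."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    label = EMOTIONS[best]
    return label, EMOJI[label], probs[best]


# Made-up scores for a single video frame.
frame_logits = [0.1, 0.0, 0.2, 3.0, 0.5, 0.1, 0.3]
label, emoji, confidence = predict_emoji(frame_logits)
print(label, emoji, round(confidence, 2))
```

In a live app this would run once per frame (or every few frames), with the GUI showing the emoji next to the video feed.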

+44 7741918549

tirthkanani18@gmail.com

London, United Kingdom

Crafted with ❤️ by Tirth